The past few years have been dominated by AI: the power of AI, the need for AI, the future of AI, and so on. While many of us have started to recognize and tune out the hyperbole, that doesn't change the fact that AI is, and will continue to be, a hugely important topic, tool and technology.

But as businesses and the world at large race to embrace, develop and implement their AI strategies, the need for high-performance compute at scale has never been higher. This year will be a race with no finish line in sight – a race to deliver the infrastructure that the world needs to keep pushing the AI envelope.

Key AI datacenter trends to watch in 2026

So, what does that mean for datacenters and their value chain? A handful of trends will both set the direction and drive the industry forward. These are the AI datacenter trends shaping 2026.

The need for speed, through modular design

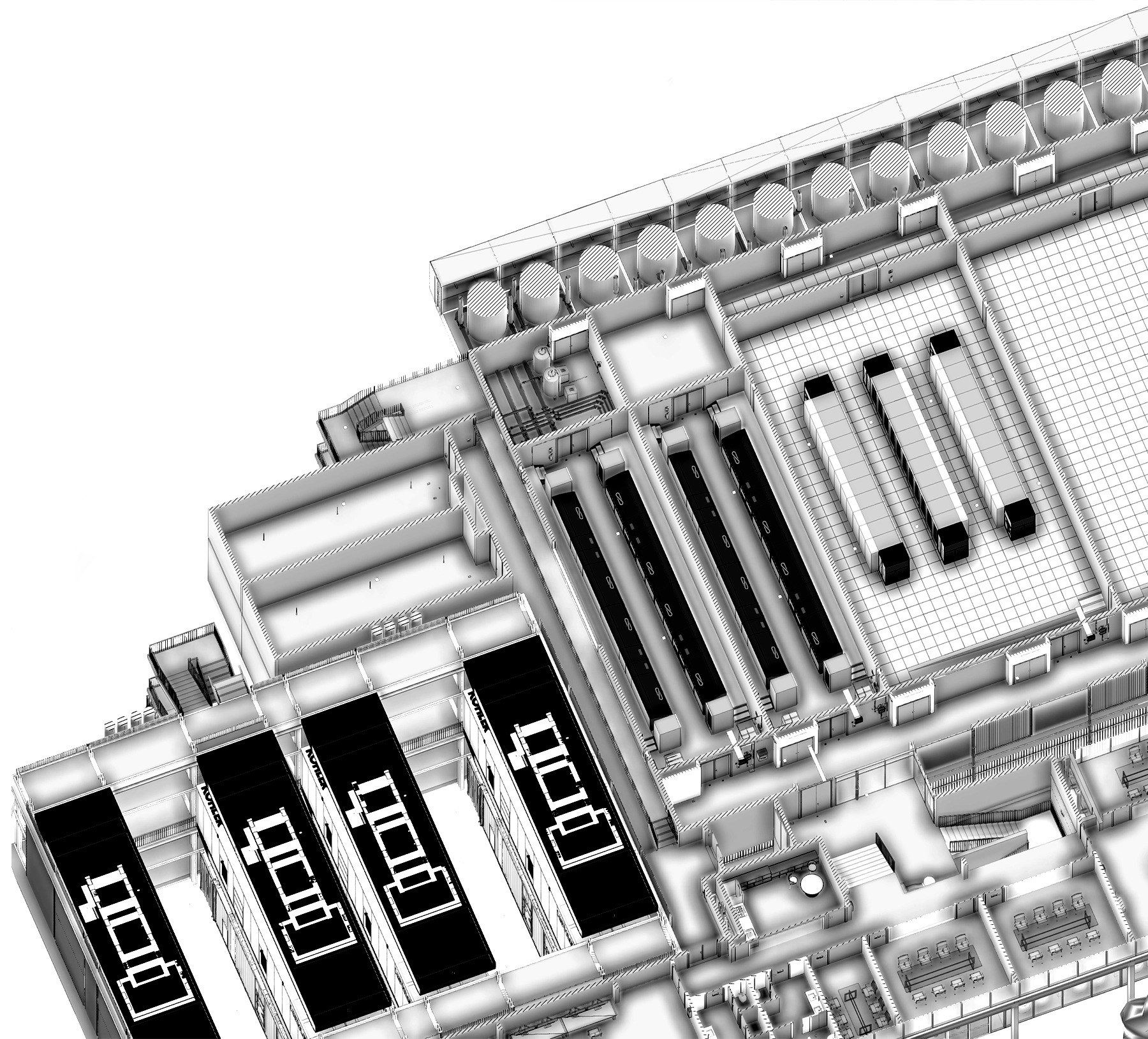

The technology industry has always moved fast, but unprecedented demand for AI has created a similarly unprecedented demand for the datacenter infrastructure needed to deliver it. This year we'll see the time from design to deployment for new datacenters shorten considerably.

Several factors will contribute to this faster deployment. Standardised reference designs for AI datacenters will significantly shorten the planning stages, while modular construction will allow for faster build times and easier scaling. In addition, a single-source datacenter infrastructure partner like Submer, accountable for the entire supply chain, can better guarantee timelines and reduce risk across the whole process.

One of our goals for 2026 is to deploy AI datacenters faster than ever and keep our customers ahead in the AI race.

Brownfield datacenters gain momentum in the AI era

A greenfield datacenter build benefits from complete flexibility of design and construction, along with the potential to build in headroom for expansion and scaling. But ensuring that a greenfield site has access to the necessary utility and network infrastructure – power, water, fiber, etc. – is a major consideration. There's also the challenge of obtaining the required permits and dealing with any local objections to the proposed build.

By contrast, a brownfield datacenter build inherits the existing infrastructure, often already has the required permits, and is far less likely to face local objections. While the scope for flexibility and scale may not be as extensive, a brownfield build can deliver something even more valuable: deployment speed. Moreover, many existing sites run inefficiently, with high PUEs (Power Usage Effectiveness) and large overhead costs. Retrofitting such datacenters comes with the added benefit of freeing up power and space for extra compute. In 2026, when the race to deliver more AI compute is paramount, going brown can help meet that need for speed.
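To make the PUE point concrete: PUE (Power Usage Effectiveness) is total facility power divided by the power delivered to IT equipment, so for a fixed grid connection, lowering PUE leaves more power for compute. The sketch below is a back-of-envelope illustration only; the 10 MW site and the before and after PUE values are hypothetical, not figures from a real retrofit.

```python
# Illustrative only: the site figures below are hypothetical, not measured data.
# PUE (Power Usage Effectiveness) = total facility power / IT equipment power,
# so for a fixed grid connection, IT power = facility power / PUE.


def it_capacity_mw(facility_mw: float, pue: float) -> float:
    """Power left for IT load when the whole site is capped at facility_mw."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return facility_mw / pue


def freed_it_capacity_mw(facility_mw: float, pue_before: float, pue_after: float) -> float:
    """Extra IT power unlocked by improving PUE without adding grid capacity."""
    return it_capacity_mw(facility_mw, pue_after) - it_capacity_mw(facility_mw, pue_before)


site_mw = 10.0  # hypothetical grid connection
before = it_capacity_mw(site_mw, 1.8)  # hypothetical legacy PUE
after = it_capacity_mw(site_mw, 1.2)   # hypothetical post-retrofit PUE
print(f"IT power before retrofit: {before:.2f} MW")
print(f"IT power after retrofit:  {after:.2f} MW")
print(f"Extra compute headroom:   {after - before:.2f} MW")
```

With these assumed numbers, the retrofit frees up roughly 2.8 MW for IT load without any new utility capacity. Real projects also have to account for cooling redesign, floor space and rack power limits, which this sketch ignores.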

The productization of datacenters

Traditionally, datacenters have been treated as unique projects, each requiring significant planning, development, build and deployment cycles. Their custom nature added to the scale and complexity of each project, and to the time it took to deploy. However, a distinct change is happening in our industry: datacenters are no longer treated as projects, but as products.

This productization of datacenters will be driven by defined reference designs and modular construction, allowing standardised datacenter products to be rolled out significantly faster and more cost-effectively.

Liquid cooling, an essential for high-density AI infrastructure

Heat has always been a challenge when designing and deploying datacenters, but ever-more powerful high-density compute required by AI workloads demands a level of cooling far beyond the traditional air-cooled solutions.
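A rough heat-balance calculation shows why air struggles at high density. The sketch below uses the standard relation Q = ṁ · c_p · ΔT to compare how much air and how much water would have to flow through a single rack to carry its heat away. The 100 kW rack load and 10 K temperature rise are hypothetical inputs, and the fluid properties are typical room-temperature approximations.

```python
# Back-of-envelope heat-removal comparison using Q = m_dot * c_p * delta_T.
# The 100 kW rack and 10 K temperature rise are hypothetical inputs;
# property values are typical room-temperature approximations.

AIR_CP = 1_005.0         # J/(kg*K), approximate
AIR_DENSITY = 1.2        # kg/m^3, approximate at ~20 C
WATER_CP = 4_186.0       # J/(kg*K), approximate
WATER_DENSITY = 1_000.0  # kg/m^3, approximate


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Coolant mass flow needed to carry heat_w away with a delta_t_k temperature rise."""
    return heat_w / (cp * delta_t_k)


rack_w = 100_000.0  # hypothetical high-density rack
delta_t = 10.0      # hypothetical coolant temperature rise, in kelvin

# Convert mass flow to volumetric flow so the two fluids can be compared directly.
air_m3_s = mass_flow_kg_s(rack_w, AIR_CP, delta_t) / AIR_DENSITY
water_m3_s = mass_flow_kg_s(rack_w, WATER_CP, delta_t) / WATER_DENSITY
print(f"Air:   {air_m3_s:.2f} m^3/s")
print(f"Water: {water_m3_s * 1_000:.2f} L/s")
print(f"Air needs ~{air_m3_s / water_m3_s:,.0f}x the volumetric flow")
```

Under these assumptions, air needs thousands of times more volumetric flow than water for the same heat load, which is the core reason high-density racks turn to liquid.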

In 2026, liquid cooling is no longer optional; it's imperative. High-density racks demand liquid cooling solutions to ensure optimal performance and resilience. Today, any datacenter partner must have experience and expertise in liquid cooling (both Direct Liquid Cooling and immersion) to build out effective AI infrastructure.

With its unrivalled experience in liquid cooling, Submer is ideally placed to develop the datacenter infrastructure that the age of AI demands.

The era of Edge

The age of AI has brought with it the need for large scale, high-performance datacenters, or AI factories. But while these huge compute palaces are integral to delivering AI and HPC workloads, there is also a clear need for more localised, low latency data processing.

We'll undoubtedly see more demand for, and investment in, compute at the Edge, bringing processing closer to where data is captured and cutting the latency that would otherwise be introduced by sending it to a distant cloud datacenter. Whether it's the explosion of IoT devices and sensors or the rollout of autonomous vehicles, the need to gather and process data with as little delay as possible will push more compute to the Edge, creating an edge-to-core datacenter model.
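The latency argument for the Edge can be sketched with simple physics. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, so distance alone sets a floor on round-trip time. The snippet below estimates only that propagation floor; the site distances are hypothetical, and real-world latency adds routing, queuing and processing delays on top.

```python
# Rough propagation-delay estimate only; real round-trip times also include
# routing, queuing, serialisation and processing delays, which are ignored here.

SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_VELOCITY_FACTOR = 0.67  # assumption: light in fiber travels at ~2/3 c


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_PER_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1_000


# Hypothetical distances from a data source to where it is processed.
for label, km in [("Edge site", 10), ("Regional cloud", 500), ("Distant cloud", 2_000)]:
    print(f"{label:15s} {km:>6,} km  ~{fiber_rtt_ms(km):6.2f} ms round trip")
```

Under these assumptions, a site 10 km away adds around 0.1 ms of round-trip propagation delay, while one 2,000 km away adds roughly 20 ms before any other delays are counted.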

While it’s the massive AI factories grabbing all the headlines and exposure right now, Edge compute will become a major focus in 2026.

Power strategy and AI growth zones reshape datacenter planning

While brownfield datacenter builds can circumvent some of the early-stage challenges, such as utility infrastructure and permits, there will still be a need for new greenfield sites. And when you're working with a blank canvas, ensuring there is adequate power for the planned build is crucial.

With governments pushing for both AI investment and AI sovereignty, we could see incentives to encourage the construction of AI factories in certain territories. The UK, for example, has already announced a plan for AI Growth Zones, intended to provide adequate power and fast-tracked planning for datacenter builds in these areas. We can also expect to see more use of alternative energy sources, such as solar, wind and hydro, to meet datacenter power needs. Not only can alternative energy make an otherwise unsuitable site viable, it can also help the build meet its sustainability goals.

Supercharged silicon, driving higher density AI compute

Even with the slowdown of Moore's Law, there's no denying the exponential growth in compute power over time, and that performance will only continue to increase in 2026. As fabrication processes continue to shrink, the transistor density and performance of AI superchips will keep rising.

Put simply, the more transistors that can be packed into each chip (each one a die cut from a silicon wafer), the less physical space is needed to deliver a given level of performance. More performance per chip means more performance per rack, and that gain is multiplied across every rack in the datacenter.
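The chip-to-datacenter scaling described above is multiplicative: datacenter performance is roughly performance per chip × chips per rack × number of racks. The sketch below flips that around to show how many racks a fixed performance target needs as per-chip performance rises. The "performance units", chip generations and 64 chips per rack are all hypothetical, and it assumes performance adds linearly across chips, which real workloads rarely achieve perfectly.

```python
import math

# Hypothetical, illustrative figures: "performance units" are abstract and not a
# real benchmark metric; 64 chips per rack is an assumed rack configuration.


def racks_needed(target_perf: float, perf_per_chip: float, chips_per_rack: int) -> int:
    """Racks required to reach target_perf, assuming performance adds linearly across chips."""
    perf_per_rack = perf_per_chip * chips_per_rack
    return math.ceil(target_perf / perf_per_rack)


TARGET = 10_000.0
CHIPS_PER_RACK = 64

# Two hypothetical chip generations with different per-chip performance.
for generation, perf_per_chip in [("Gen N", 1.0), ("Gen N+1", 2.5)]:
    racks = racks_needed(TARGET, perf_per_chip, CHIPS_PER_RACK)
    print(f"{generation}: {racks} racks for {TARGET:,.0f} performance units")
```

With these illustrative numbers, a 2.5× per-chip gain cuts the rack count from 157 to 63 for the same target.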

For anyone who has worked in high-performance computing for a while, the speed of progress and the extra performance delivered by each new generation of chip isn't surprising. But with workloads becoming ever more complex, the need for more powerful, higher-density compute has never been greater.

2026 has only just begun, but it's already clear that some of the AI datacenter trends above will shape how datacenters evolve over the coming months and beyond. One thing is certain: the need for datacenters and cloud infrastructure is only going to increase, and the efficiency and sustainability of that infrastructure must keep pace.